Voice input methods are not just about being “fast”; they are becoming a new gateway for developers to collaborate with AI.

AI Voice Input Methods Are Becoming the “New Shortcut Key” in the Programming Era

I am increasingly convinced of one thing: PC-based AI voice input methods are evolving from mere “input tools” into the foundational interaction layer for the era of programming and AI collaboration.

It is not just about typing faster. It determines how you deliver your intent to the system, whether you are writing documentation or code, or collaborating with AI in an IDE, a terminal, or a chat window.

Because of this, the differences in voice input method experiences are far more significant than they appear on the surface.

My Six Evaluation Criteria for AI Voice Input Methods

After long-term, high-frequency use, I have developed a set of criteria to assess the real-world performance of AI voice input methods:

- Response speed: Does text appear quickly enough after pressing the shortcut to keep up with your thoughts?

- Continuous input stability: Does it remain reliable during extended use, or does it suddenly fail or miss recognition?

- Mixed Chinese-English and technical terms: Can it reliably handle code, paths, abbreviations, and product names?

- Developer friendliness: Is it truly designed for command line, IDE, and automation scenarios?

- Interaction restraint: Does it avoid introducing distracting features that interfere with input itself?

- Subscription and cost structure: Is it a standalone paid product, or can it be bundled with existing tool subscriptions?

Based on these criteria, I focused on comparing Miaoyan, Shandianshuo, and Zhipu AI Voice Input Method.

Miaoyan: Currently the Most “Developer-Oriented” Domestic Product

Miaoyan was the first domestic AI voice input method I used extensively, and it remains the one I am most willing to use continuously.

Command Mode: The Key Differentiator for Developer Productivity

It’s important to clarify that Miaoyan’s command mode is not about editing text via voice. Instead:

You describe your need in natural language, and the system directly generates an executable command-line command.

This is crucial for developers:

- It’s not just about input

- It’s about turning voice into an automation entry point

- Essentially, it connects voice to the CLI or toolchain

This design is clearly focused on engineering efficiency, not office document polishing.
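To make the “voice to CLI” idea concrete, here is a minimal sketch of what a command layer could look like. It is not Miaoyan's implementation, which this article does not document: the `TEMPLATES` table, `transcript_to_command`, and `preview` are hypothetical names, and a fixed lookup table stands in for whatever model a real product would use. The point it illustrates is the shape of the pipeline: transcript in, structured command out, with a preview step before anything runs.

```python
import shlex
from typing import List, Optional

# Hypothetical phrase-to-command table. A real product would presumably use a
# language model here; a lookup table keeps the sketch self-contained.
TEMPLATES = {
    "list files": ["ls", "-la"],
    "show git status": ["git", "status"],
    "show current directory": ["pwd"],
}


def transcript_to_command(transcript: str) -> Optional[List[str]]:
    """Map a spoken request to an argv list, or None if nothing matches."""
    # Lowercase, trim surrounding punctuation, and collapse repeated whitespace.
    normalized = " ".join(transcript.lower().strip(" .!?").split())
    return TEMPLATES.get(normalized)


def preview(argv: List[str]) -> str:
    """Render the command as a shell-quoted string for user confirmation."""
    return shlex.join(argv)


if __name__ == "__main__":
    command = transcript_to_command("Show git status.")
    if command is None:
        print("No matching command; falling back to plain dictation.")
    else:
        # Nothing is executed here: the user should confirm the preview first.
        print("Proposed command:", preview(command))
```

Returning an argv list rather than a raw shell string is a deliberate choice in this sketch: it keeps the generated command inspectable and avoids passing recognized speech straight into a shell.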

Usage Experience Summary

- Fast response, nearly instant

- Output is relatively clean, with little guessed or invented text

- Interaction design is restrained, with no unnecessary concepts

- Developer-friendly mindset

But there are some practical limitations:

- It is a completely standalone product

- Requires a separate subscription

- Still has a relatively small user base

From a product strategy perspective, it feels more like a “pure tool” than part of an ecosystem.

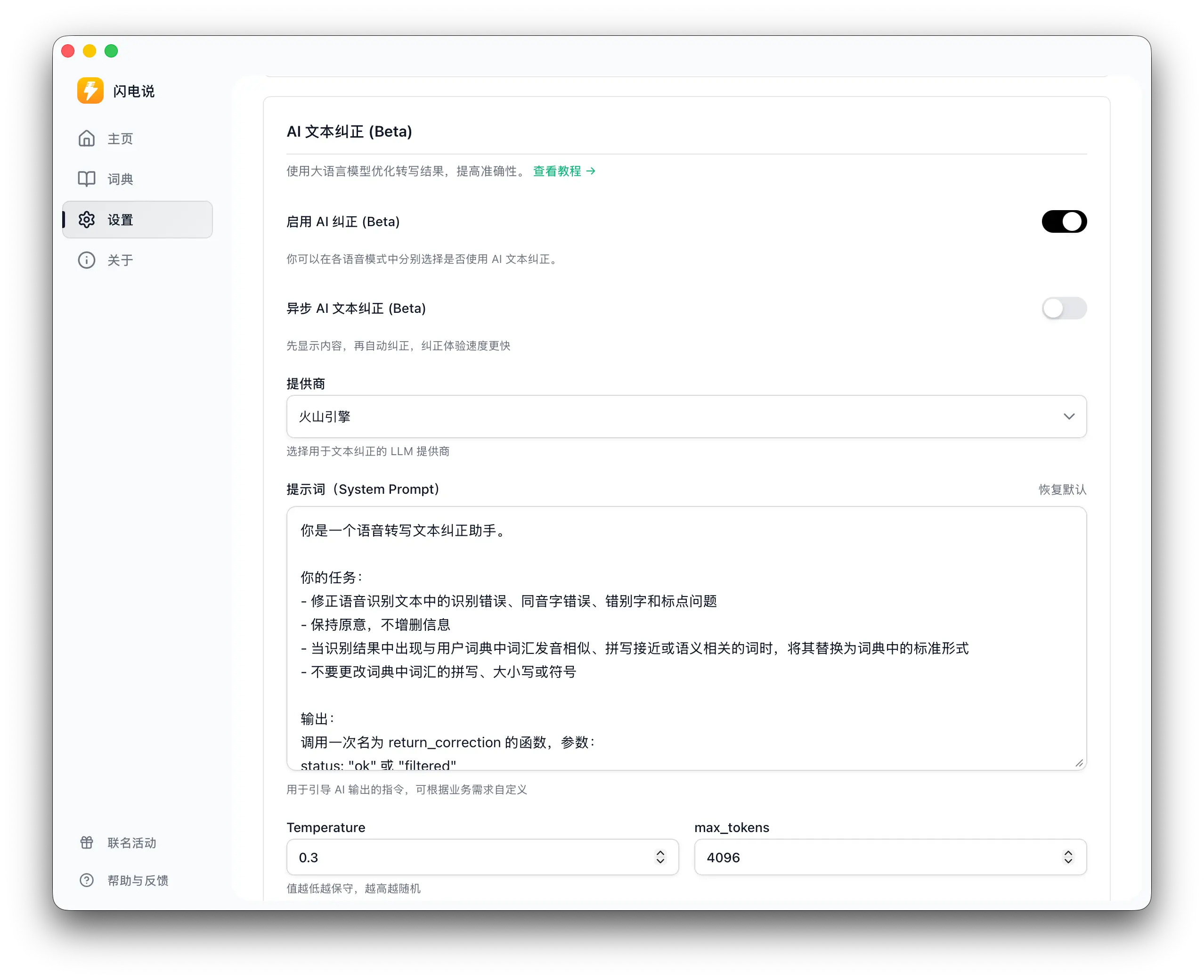

Shandianshuo: Local-First Approach, Developer Experience Depends on Your Setup

Shandianshuo takes a different approach: it treats voice input as a “local-first foundational capability,” emphasizing low latency and privacy (at least in its product narrative). The natural advantages of this approach are speed and controllable marginal costs, making it suitable as a “system capability” that’s always available, rather than a cloud service.

However, from a developer’s perspective, its ceiling depends largely on how you set up the enhanced capabilities:

If you only use it for basic transcription, the experience is more like a high-quality local input tool. But if you want better mixed Chinese-English input, technical term correction, symbol and formatting handling, the common approach is to add optional AI correction/enhancement capabilities, which usually requires extra configuration (such as providing your own API key or subscribing to enhanced features). The key trade-off here is not “can it be used,” but “how much configuration cost are you willing to pay for enhanced capabilities.”
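As a rough illustration of what “technical term correction” involves, the sketch below applies a small glossary to a raw transcript. This is a plain rule-based pass, not the AI correction described above and not Shandianshuo's actual mechanism; the `GLOSSARY` entries and the `correct_terms` function are hypothetical. It shows why some configuration is unavoidable: someone has to supply the terms that matter in your own work.

```python
import re
from typing import Dict

# Hypothetical glossary: lowercase spoken form -> canonical written form.
GLOSSARY: Dict[str, str] = {
    "github": "GitHub",
    "type script": "TypeScript",
    "vs code": "VS Code",
    "api key": "API key",
}


def correct_terms(text: str, glossary: Dict[str, str] = GLOSSARY) -> str:
    """Replace spoken forms of technical terms with their canonical spelling."""
    # Process longer entries first so multi-word terms are handled before
    # any shorter entry that might overlap with them.
    for spoken in sorted(glossary, key=len, reverse=True):
        written = glossary[spoken]
        pattern = re.compile(r"\b" + re.escape(spoken) + r"\b", re.IGNORECASE)
        # A function replacement avoids backslash processing in `written`.
        text = pattern.sub(lambda match, w=written: w, text)
    return text


if __name__ == "__main__":
    print(correct_terms("push it to github using vs code"))
```

A glossary like this is cheap and predictable but only covers terms you list in advance, which is the trade-off against a model-based correction step that needs an API key or a subscription.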

If you want voice input to be a “lightweight, stable, non-intrusive” foundation, Shandianshuo is worth considering. But if your goal is to make voice input part of your developer workflow (such as command generation or executable actions), it still needs a more polished, productized “command layer” and better controllability.

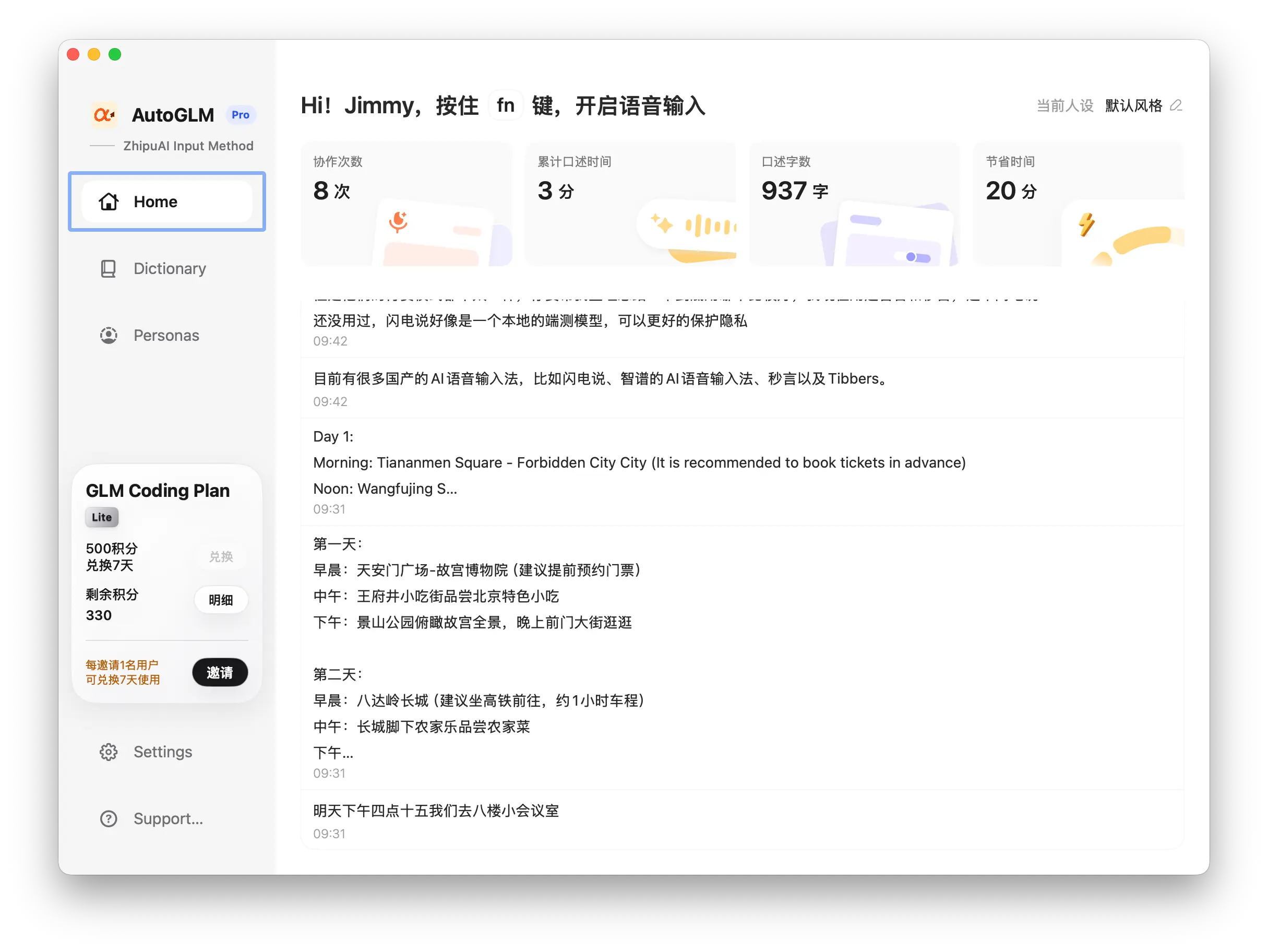

Zhipu AI Voice Input Method: Stable but with Friction

I also thoroughly tested the Zhipu AI Voice Input Method.

Its strengths include:

- More stable for long-term continuous input

- Rarely becomes completely unresponsive

- Good tolerance for longer Chinese input

But with frequent use, some issues stand out:

- Idle misrecognition: If you press the shortcut but don’t speak, it may output random characters, disrupting your input flow

- Occasionally messy output: Sometimes adds irrelevant words, making it less controllable than Miaoyan

- Basic recognition errors: For example, the product’s own name, “Zhipu,” is sometimes transcribed with a wrong same-sounding character, which undermines trust for professional users

- Feature-heavy design: Various tone and style features increase cognitive load
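The idle misrecognition issue in the list above is commonly mitigated by checking whether a recording contains any speech before it is sent to the recognizer. The sketch below is one simple way to do that with an energy threshold; it is an illustration of the general technique, not a description of how Zhipu or any other product works. The function names, the 320-sample frame (20 ms at an assumed 16 kHz sample rate), the threshold of 500, and the five-frame minimum are all assumed values that would need tuning for a real microphone.

```python
import math
from typing import Sequence


def rms(samples: Sequence[int]) -> float:
    """Root-mean-square amplitude of a block of 16-bit PCM samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def contains_speech(
    samples: Sequence[int],
    frame_size: int = 320,        # assumed: 20 ms at 16 kHz
    threshold: float = 500.0,     # assumed energy threshold, needs tuning
    min_voiced_frames: int = 5,   # assumed: roughly 100 ms of sound in total
) -> bool:
    """Return True once enough frames exceed the energy threshold."""
    voiced = 0
    for start in range(0, len(samples), frame_size):
        if rms(samples[start:start + frame_size]) >= threshold:
            voiced += 1
            if voiced >= min_voiced_frames:
                return True
    return False


if __name__ == "__main__":
    silence = [0] * 16000
    if not contains_speech(silence):
        # Skip recognition entirely so nothing is typed into the document.
        print("No speech detected; nothing to transcribe.")
```

A gate like this counts loud frames anywhere in the recording, so a single short click is ignored while sustained sound passes through. It does not distinguish speech from other loud noise, which is where a proper voice activity detector would be needed.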

Subscription Bundling: Zhipu’s Practical Advantage

Although I prefer Miaoyan in terms of experience, Zhipu has a very practical advantage:

If you already subscribe to Zhipu’s programming package, the voice input method is included for free.

This means:

- No need to pay separately for the input method

- Lower psychological and decision-making cost

- More likely to become the “default tool” that stays

From a business perspective, this is a very smart strategy.

Main Comparison Table

The following table compares the three products across key dimensions for quick reference.

| Dimension | Miaoyan | Shandianshuo | Zhipu AI Voice Input Method |
|---|---|---|---|
| Response Speed | Fast, nearly instant | Usually fast (local-first) | Slightly slower than Miaoyan |
| Continuous Stability | Stable | Depends on setup and environment | Very stable |
| Idle Misrecognition | Rare | Generally restrained (varies by version) | Obvious: outputs characters even if silent |
| Output Cleanliness/Control | High | More like an “input tool” | Occasionally messy |
| Developer Differentiator | Natural language → executable command | Local-first / optional enhancements | Ecosystem-attached capabilities |
| Subscription & Cost | Standalone, separate purchase | Basic usable; enhancements often require setup/subscription | Bundled free with programming package |
| My Current Preference | Best experience | More like a “foundation approach” | Easy to keep but not clean enough |

User Loyalty to AI Voice Input Methods

The switching cost for voice input methods is actually low: all you change is a shortcut key and the output style you have gotten used to.

What really determines whether users stick around is:

- Whether the output is controllable

- Whether it keeps causing annoying minor issues

- Whether it integrates into your existing workflow and payment structure

For me personally:

- The best and smoothest experience is still Miaoyan

- The one most likely to stick around is probably Zhipu

- Shandianshuo is more of a “foundation approach” and worth watching for how its enhancements evolve

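These points are not contradictory: the product with the best experience and the product most likely to stay installed can be different ones.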

Summary

- Miaoyan is more mature in engineering orientation, command capabilities, and input control

- Zhipu has practical advantages in stability and subscription bundling

- Shandianshuo takes a local-first + optional enhancement approach, with the key being how it balances “basic capability” and “enhancement cost”

- Which product truly becomes the “default gateway” depends on who reduces distractions, fixes the frequent minor issues, and treats voice input as real “infrastructure” rather than an add-on feature

The competition among AI voice input methods is no longer about recognition accuracy, but about who can own the shortcut key you press every day.