Apollo is a local-first AI client designed specifically for mobile devices, focusing on privacy protection and low-latency experiences. It enables users to enjoy AI conversations and inference capabilities directly on their phones without relying on the cloud. This article provides a comprehensive overview of Apollo’s positioning, key features, technical architecture, and use cases, helping you understand its strengths and limitations.

Apollo — Bringing AI Within Reach

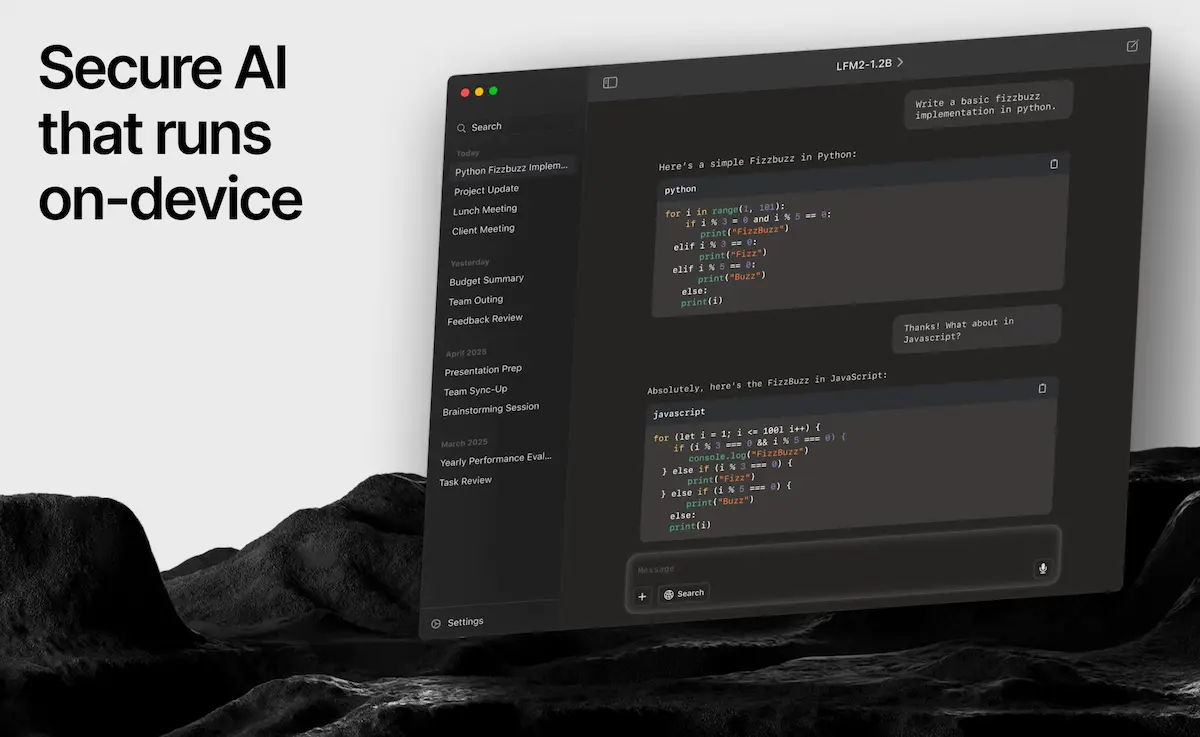

Apollo is a lightweight mobile AI client developed by Liquid AI that aims to deliver a fully private, on-device AI experience without cloud dependency. As the flagship app of the Liquid Edge AI Platform (LEAP), Apollo serves as a primary entry point for users to try edge AI and to “vibe-check” a model, that is, to get a quick feel for its tone and behavior.

Before diving into Apollo’s features, let’s look at its core positioning and main advantages.

Core Positioning and Advantages

| Dimension | Features / Strengths |
|---|---|
| Local & Private | All inference runs locally, with no conversations uploaded to the cloud, ensuring user data privacy and security. |
| Low Latency | Completely eliminates network delays, providing fast, real-time conversational experiences. |
| Model Flexibility | Supports running various small language models (SLMs) from the LEAP platform, as well as open-source or self-hosted LLMs. |
| Open & Extensible | Allows integration with OpenRouter APIs, external model backends, or self-hosted LLMs for flexible expansion. |
| Developer Friendly | Can be used as a “vibe-check device preview” tool, enabling developers to test model performance on mobile devices in real time. |

With these features, Apollo meets multiple needs for privacy, responsiveness, and model flexibility.

Key Features

Apollo offers a rich set of features suitable for various scenarios. Its main highlights include:

- Chat and Conversation: Smooth chat interface supporting multi-turn conversations with local models.

- Model Selection and Switching: Choose and switch between different models (including LEAP platform models or external models) as the conversation backend within Apollo.

- Local Model Support (Experimental): iOS supports running certain lightweight models locally, with ongoing enhancements planned.

- Custom Backend Integration: Connect to self-hosted model servers (local or private cloud LLMs), using Apollo as a frontend client.

- Version Updates and Performance Optimization: Continuous improvements to the chat interface, stability, and model invocation efficiency.

These features make Apollo suitable not only for general users but also as a convenient tool for developers and researchers to test and integrate models.
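This article does not document how Apollo talks to a custom backend, so the following is only a sketch of the kind of request a frontend client typically sends to a self-hosted model server. It assumes the server exposes an OpenAI-compatible `/chat/completions` endpoint, a convention that OpenRouter and many self-hosted servers follow. The base URL, model name, and the helper `build_chat_request` are hypothetical and chosen purely for illustration.

```python
import json
import urllib.request


def build_chat_request(base_url, model, messages, api_key=None):
    """Build (but do not send) a chat request for an OpenAI-compatible server."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted gateways usually expect a bearer token;
        # a server on your own network may not need one.
        headers["Authorization"] = f"Bearer {api_key}"
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Hypothetical self-hosted server on the local network (assumption).
request = build_chat_request(
    base_url="http://192.168.1.20:8080/v1",
    model="my-local-model",
    messages=[{"role": "user", "content": "Hello from my phone"}],
)
print(request.get_method(), request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it. Keeping construction separate from sending makes it easy to inspect the payload before pointing a client at a real server.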

Technical Architecture and Platform Support

Apollo’s design and implementation are built on Liquid AI’s LEAP platform, providing a strong technical foundation.

- LEAP (Liquid Edge AI Platform): An AI deployment toolchain for device/edge environments, supporting efficient model deployment on iOS, Android, and more, balancing inference performance and model size.

- Liquid Foundation Models (LFM): Efficient small model architectures (such as LFM2) developed by Liquid, suitable for resource-constrained devices.

- Apollo Integration: Apollo is tightly integrated with LEAP, so users can try LEAP platform models inside Apollo and then debug or deploy them in their own applications.

This architecture enables Apollo to unify local inference with flexible extensibility.

Target Users and Typical Scenarios

Apollo is designed for a variety of users and scenarios, meeting diverse needs:

- General users seeking privacy and responsiveness, wanting an AI chat tool without cloud dependency.

- AI researchers or model engineers needing to test model latency and usability in real mobile environments.

- Developers wishing to let users experience self-hosted models on mobile, achieving end-to-end integration.

- Teams working on edge computing, IoT, and unmanned-device scenarios, who can use Apollo to test and demonstrate on-device model performance.

These use cases highlight Apollo’s flexibility and practical value.
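For the latency-testing scenario, two numbers are commonly reported: time to first token and overall tokens per second. The sketch below measures both for any iterator that yields tokens. It is not tied to Apollo or LEAP; `measure_stream` and the stand-in generator `fake_model` are hypothetical names, and the simulated delay is an arbitrary placeholder for a real model.

```python
import time


def measure_stream(token_stream):
    """Consume a token iterator; report time to first token and tokens/second."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in token_stream:
        if first_token_at is None:
            first_token_at = time.perf_counter()
        count += 1
    elapsed = time.perf_counter() - start
    if count == 0:
        return {"tokens": 0, "ttft_s": None, "tokens_per_s": 0.0}
    return {
        "tokens": count,
        "ttft_s": first_token_at - start,
        # Overall throughput, including the wait for the first token.
        "tokens_per_s": count / elapsed if elapsed > 0 else float("inf"),
    }


def fake_model(n_tokens, delay_s=0.01):
    """Stand-in generator that simulates a model emitting tokens (assumption)."""
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i}"


stats = measure_stream(fake_model(20))
print(stats)
```

Replacing `fake_model` with a real streaming response gives comparable figures across devices, as long as the prompt and output length are held constant.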

Limitations and Challenges

Despite its many advantages, Apollo faces some limitations in real-world applications:

- Model Size Constraints: Because device resources are limited, Apollo is best suited to small or streamlined models; large models and tasks with very long contexts are difficult to support.

- Model Capability Limits: Local models may lag behind large cloud models in comprehension, knowledge, and context memory.

- Hardware Compatibility Variations: On low-end or low-memory devices, model operation may be unstable or laggy.

- Feature and Licensing Restrictions: Some advanced models or commercial APIs require authorization from Liquid AI or third parties.

Developers and users should consider these factors when deploying Apollo and choose appropriate models and scenarios.
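To make the model size constraint concrete, a rough back-of-the-envelope estimate is parameter count times bits per weight, divided by eight to get bytes. The sketch below applies that arithmetic. It covers weights only and ignores the KV cache, activations, and runtime overhead, so real memory use will be higher. The parameter counts and bit widths are illustrative assumptions, not a list of models Apollo supports.

```python
def weight_memory_gib(n_params, bits_per_weight):
    """Approximate memory needed for model weights only, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


# Illustrative sizes (assumptions), from a small model to a mid-size one.
for n_params in (350e6, 1.2e9, 7e9):
    for bits in (16, 8, 4):
        gib = weight_memory_gib(n_params, bits)
        print(f"{n_params / 1e9:.2f}B params @ {bits}-bit: {gib:.2f} GiB")
```

Under these assumptions, a 1.2B-parameter model at 4 bits per weight needs roughly 0.56 GiB for weights, while a 7B-parameter model at 16 bits needs about 13 GiB, which is more memory than most phones have available.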

Summary

As a local AI client from Liquid AI, Apollo brings a new experience to mobile AI applications with its privacy protection, low latency, and flexible model integration. Both general users and developers can enjoy efficient and secure AI conversations on Apollo. In the future, as hardware and model technologies advance, Apollo is expected to support more features and powerful models, further driving the development of edge AI.