Introduction: New Trends Echoing History

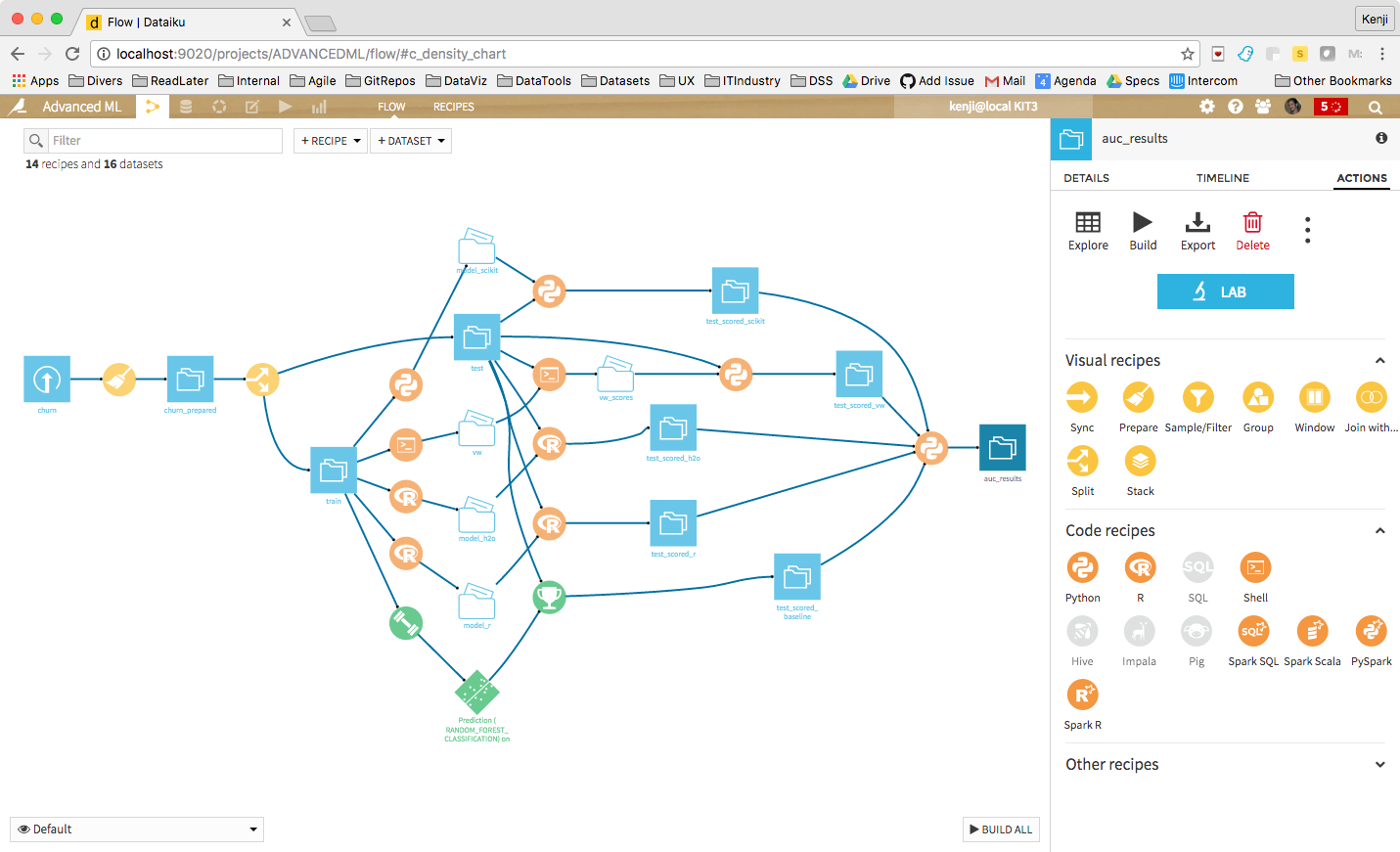

Since 2010, enterprises have adopted stream data processing to handle the explosion of log analytics, IoT data, and web clickstreams. Tools like Apache NiFi, StreamSets, and Dataiku enabled developers to build real-time data flows, ETL, and batch processing via visual nodes and drag-and-drop pipelines. Today, with breakthroughs in large language models (LLMs), similar AI agent workflow platforms (such as n8n, Coze, and Dify) have emerged. These platforms also focus on drag-and-drop orchestration but support intelligent decision-making, context memory, and tool invocation. While they may look like “old wine in new bottles,” their core drivers have shifted from rules and SQL to model inference.

Having worked in big data myself and researched tools like Dataiku and StreamSets, I find the rise of AI agent platforms both familiar and transformative. These platforms not only inherit the visual orchestration concepts of the big data era but also achieve qualitative leaps in intelligent decision-making and automated execution.

This article analyzes three cases—Dataiku (transitioning from data platform to AI), StreamSets (data pipeline platform acquired by IBM and integrated into watsonx), and n8n (AI agent platform)—to explore the connections and differences between big data stream processing and AI agents, and how Dataiku evolved from an ETL tool to a full-stack AI-native platform.

Big Data Era Stream Processing: Frameworks, Features, and Limitations

The big data era saw the rise of various stream processing frameworks for real-time analytics and massive data handling. Typical tools included:

| Tool | Key Features | Limitations |
|---|---|---|
| Apache NiFi | Visual drag-and-drop data flow construction; supports 100+ sources/targets; ideal for ETL and log pipelines. | Lacks intelligent decision-making; manual rule configuration required. |
| IBM StreamSets | Cloud-native data integration platform; reusable pipelines; no-code drag-and-drop UI, predefined processors, dynamic adaptation to data changes; pushdown processing that runs complex transformations directly in Snowflake. | Focused on data ingestion and ETL; limited semantic understanding of unstructured data. |
| Dataiku (Early) | Enterprise data science platform integrating ingestion, cleaning, feature engineering, model training, and deployment; drag-and-drop workflows for collaborative ETL. | AI capabilities mainly traditional ML; lacks LLM inference and language understanding. |

These tools use nodes (Processors) and connections to describe data flow (input → process → output), emphasizing data quality, visualization, and low-code. Decision logic is explicitly coded by developers, making it hard to handle complex context or automate task planning.
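
To make the “node + connection” model concrete, here is a minimal sketch in plain Python. It does not use NiFi, StreamSets, or Dataiku APIs; the `Processor` class, the log format, and the routing targets are all invented for illustration. The point it shows is that every decision in the flow is a rule someone wrote by hand.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator

Record = dict


@dataclass
class Processor:
    """One node in the flow: a name plus a function over a stream of records."""
    name: str
    fn: Callable[[Iterable[Record]], Iterator[Record]]


def parse(lines):
    # Input node: split "LEVEL | message" log lines into records.
    for line in lines:
        level, _, message = line.partition("|")
        yield {"level": level.strip(), "message": message.strip()}


def drop_debug(records):
    # Hand-written rule: the developer decides what counts as noise.
    return (r for r in records if r["level"] != "DEBUG")


def route(records):
    # Another explicit rule: errors go to alerts, everything else to the archive.
    for r in records:
        yield {**r, "target": "alerts" if r["level"] == "ERROR" else "archive"}


def run_flow(processors, source):
    # Connections are implied by list order: each node's output feeds the next.
    stream = source
    for p in processors:
        stream = p.fn(stream)
    return list(stream)


FLOW = [
    Processor("parse", parse),
    Processor("drop_debug", drop_debug),
    Processor("route", route),
]

result = run_flow(FLOW, ["INFO | started", "DEBUG | tick", "ERROR | disk full"])
for record in result:
    print(record)
```

If a new log level or a fuzzier condition shows up ("anything that looks like a payment failure"), someone has to edit `drop_debug` or `route`. That is the limitation the rest of this article contrasts with LLM-driven nodes.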

AI-Native Agents: Capability Leap Beneath a Familiar Interface

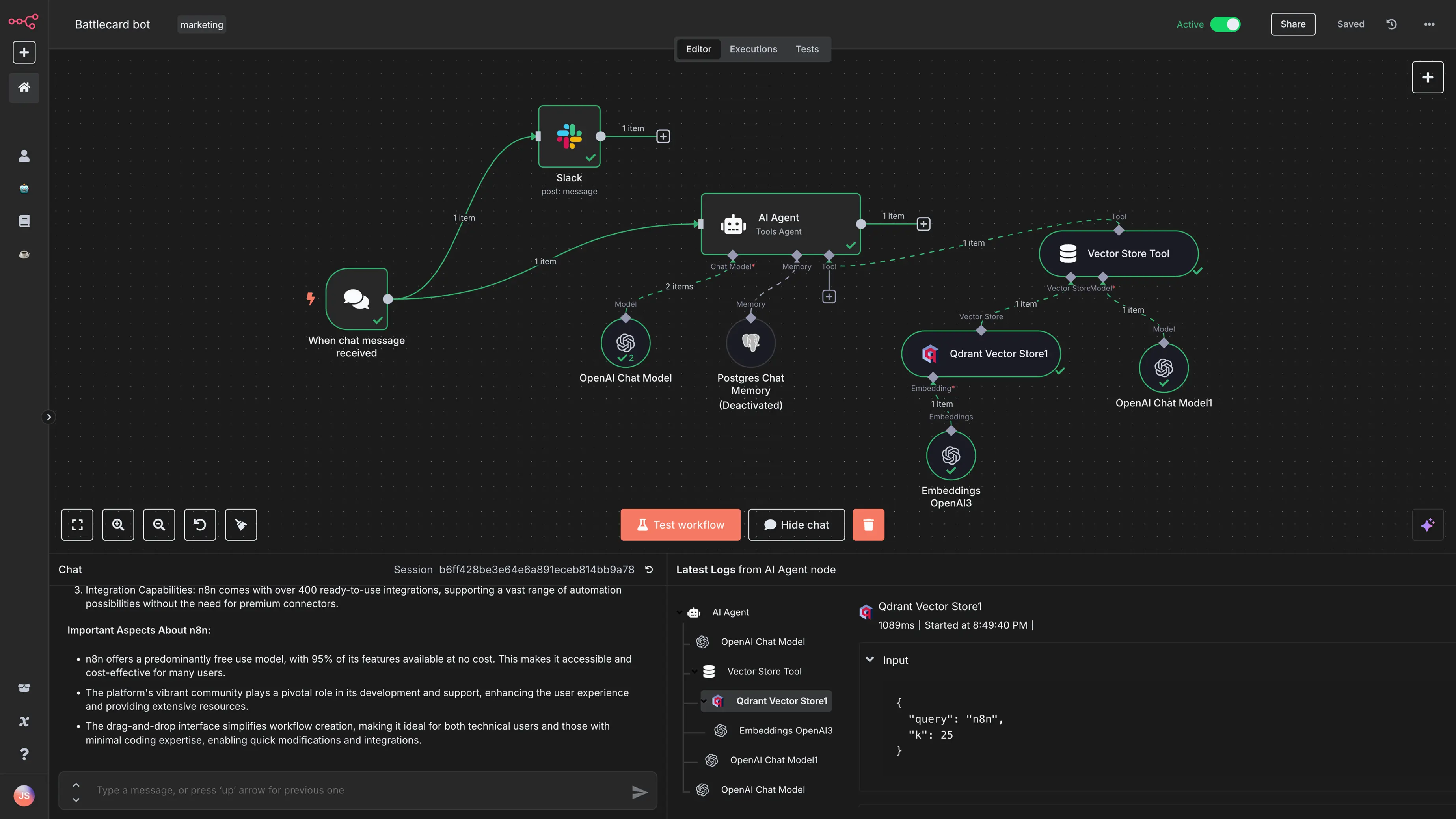

AI agent platforms inherit the “node + connection” engineering paradigm, but are powered by LLMs and external tool invocation. For example, n8n offers:

- Diverse node types: Drag-and-drop Input, LLM Node, Tool Call, Memory, etc., to build agent workflows for retrieval QA, data analysis, email replies, and more.

- Two trigger modes: n8n supports “user-activated” agents (chat-triggered) and “event-driven” agents (webhook/timer-triggered), suitable for real-time applications.

- LangChain integration: Most LangChain components are available as visual nodes; developers can drag nodes to invoke tools, vector DBs, or memory modules. Advanced users can write JavaScript to customize LangChain modules.

- Strong extensibility: Over 400 integrations for APIs, databases, SaaS; supports multiple LLMs (OpenAI, Google Gemini, etc.).

- LLM Agent features: LLM agents can decompose tasks, maintain context, call external tools, and learn from feedback. n8n’s guides highlight strategic planning, memory/context management, and tool integration as key enterprise agent capabilities.

These features enable AI agent workflows to automate not just data transfer but also complex decisions, text/code generation, knowledge retrieval, and continuous optimization based on feedback.
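
The decide, call a tool, remember loop behind these features can be sketched in a few lines of Python. This is not n8n or LangChain code: `fake_model` is a stub standing in for a real LLM call, and the two tools and the order data are made up. What matters is the shape of the loop, where the next step comes from the model's output rather than from a fixed wiring of nodes.

```python
from typing import Callable


# Hypothetical tools; on a visual platform these would be Tool Call nodes.
def lookup_order(order_id: str) -> str:
    orders = {"A17": "shipped", "B42": "processing"}
    return orders.get(order_id, "unknown")


def send_email(body: str) -> str:
    return f"email queued: {body}"


TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "send_email": send_email,
}


def fake_model(goal: str, memory: list[str]) -> dict:
    """Stand-in for an LLM call that picks the next action.

    A real agent would send the goal, the memory, and the tool descriptions
    to a model and parse its reply; this stub only imitates that decision.
    """
    if not memory:
        return {"tool": "lookup_order", "input": goal.split()[-1]}
    if len(memory) == 1:
        status = memory[0].split(" -> ")[-1]
        return {"tool": "send_email", "input": f"Your order is {status}."}
    return {"final": memory[-1].split(" -> ")[-1]}


def run_agent(goal, model=fake_model, max_steps=5):
    memory = []  # context carried from one step to the next
    for _ in range(max_steps):
        decision = model(goal, memory)
        if "final" in decision:
            return decision["final"], memory
        observation = TOOLS[decision["tool"]](decision["input"])
        memory.append(f'{decision["tool"]}({decision["input"]}) -> {observation}')
    return "step limit reached", memory


answer, trace = run_agent("Tell the customer the status of order A17")
print(answer)
for step in trace:
    print(step)
```

The `max_steps` cap is a design choice worth keeping in any real agent: since the model chooses the path, the runtime needs its own stopping condition.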

The Synergy of AI and Stream Data Processing

As noted in Confluent’s blog Life Happens in Real Time, Not in Batches: AI Is Better With Data Streaming, pairing AI with streaming data pays off in scenarios such as:

- Real-time customer behavior analysis for improved experience and recommendations

- Industrial equipment monitoring and predictive maintenance to reduce downtime

- Financial fraud detection and algorithmic trading to lower risk

- IoT edge device anomaly detection and alerts

- Real-time optimization of supply chains and transportation

Data streams are the enterprise’s nervous system; AI/ML is the brain. Only by combining both can true intelligence and business agility be achieved.

Dataiku: From Data Platform to “Universal AI Platform”

Dataiku’s evolution exemplifies the convergence of big data stream processing and AI agent technologies. As an enterprise data platform, Dataiku has witnessed the shift from traditional ETL to intelligent decision-making, integrating LLM Mesh, AI Assistants, and agent capabilities into a unified platform. Below is a detailed look at Dataiku’s technical evolution, showing how it grew from a data pipeline tool to a universal AI platform, culminating in full agent capabilities in 2025.

Early Positioning and Stream Processing Capabilities

Founded in 2013, Dataiku started as an enterprise data science platform, integrating data preparation, feature engineering, visualization, and ML modeling. Users could design ETL flows via drag-and-drop, configure input, transformation, aggregation, and output nodes, or write Python/SQL code. These features aligned with NiFi and StreamSets’ visual pipeline concepts.

Transition to AI: LLM Mesh and Agent Capabilities

With the generative AI boom, Dataiku rapidly expanded its platform (2023–2025):

- LLM Mesh: Unified access to internal/external LLMs (Llama3, Gemma, Claude 3, GPT‑4o, etc.), supporting streaming output, cost control, custom parameters, multimodal input, smart chunking, knowledge base management, and RAG workflow optimization.

- Dataiku Answers & Prompt Studio: Dataiku Answers enables chat-based database queries and SQL generation; Prompt Studio helps design/test prompt templates, supporting chain-of-thought and vector search.

- AI Assistants: DSS 12 introduced AI Code Assistant (natural language code generation/explanation in Jupyter/VS Code), AI Prepare (auto-generates data cleaning steps from natural language), and AI Explain (auto-generates documentation for flows/code).

- Governance & Security: LLM Cost Guard, Quality Guard, Safe Guard for real-time monitoring of cost, quality, and security; automatic LLM component tagging for governance and approval.
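
The general idea behind a model gateway with a cost guard can be sketched as follows. This is not Dataiku's LLM Mesh API: the `Gateway` and `ModelEntry` classes, the model names, the prices, and the character-based cost estimate are all assumptions made for illustration. The sketch only shows why routing every call through one layer makes cost limits and audit logs easy to enforce.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelEntry:
    call: Callable[[str], str]   # provider-specific client, stubbed below
    cost_per_1k_chars: float     # made-up price, for illustration only


@dataclass
class Gateway:
    models: dict
    budget: float
    spent: float = 0.0
    log: list = field(default_factory=list)

    def complete(self, model_id: str, prompt: str) -> str:
        entry = self.models[model_id]
        estimate = len(prompt) / 1000 * entry.cost_per_1k_chars
        if self.spent + estimate > self.budget:
            # Cost guard: refuse before the provider is ever called.
            raise RuntimeError(f"budget exceeded for {model_id}")
        reply = entry.call(prompt)
        self.spent += estimate
        # Every call leaves a record that governance tooling could inspect.
        self.log.append((model_id, len(prompt), estimate))
        return reply


gateway = Gateway(
    models={
        "small": ModelEntry(lambda p: f"[small] {p[:20]}", 0.01),
        "large": ModelEntry(lambda p: f"[large] {p[:20]}", 1.00),
    },
    budget=0.05,
)

print(gateway.complete("small", "Summarize yesterday's pipeline failures."))
try:
    gateway.complete("large", "x" * 100)  # estimated cost 0.10, over budget
except RuntimeError as err:
    print(err)
```

Because callers only ever see `gateway.complete`, swapping one provider for another or tightening the budget does not touch application code.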

2025: Full Release of AI Agent Capabilities

In April 2025, Dataiku launched AI Agents with Dataiku, enabling agent creation and management on the Universal AI Platform. Key features:

- Centralized agent creation/governance: Visual no-code environment for rapid agent building, plus code customization; built-in Managed Agent Tools, GenAI Registry, and Agent Connect for unified management, routing, and quality assurance.

- Secure execution: Agents run on approved models/infrastructure via LLM Mesh, with Safe Guard for content/prompt injection protection.

- Observability and cost monitoring: Trace Explorer, Quality Guard, Cost Guard for end-to-end monitoring of agent inputs/outputs, decision paths, performance, and cost.

- Scenario integration: Agents connect with existing data pipelines, MLOps workflows, and model governance, supporting Snowflake, Databricks, Microsoft, AWS, Google, and multi-cloud.

Subsequent partnerships:

- Financial Services Blueprint (June 11, 2025): Dataiku and NVIDIA launched FSI Blueprint, combining Universal AI Platform, LLM Mesh, NVIDIA NIM microservices, NeMo, and GPU acceleration for fraud detection, customer service, risk analysis, etc. Prebuilt components enable rapid, compliant agent deployment, reinforced by Dataiku LLM Guard Services.

- HPE Unleash AI Ecosystem (June 25, 2025): Dataiku and HPE partnered to deploy Universal AI Platform on HPE Private Cloud AI, integrating NVIDIA AI-Q Blueprint and NIM microservices for end-to-end generative AI and agentic systems.

Through these developments, Dataiku evolved from an ETL-focused “big data tool” to an enterprise universal AI platform. CTO Clément Stenac stated in a 2025 interview that Dataiku’s mission is to enable secure AI application development across models, data, and tools, supported by LLM Mesh, Guard Services, and governance.

StreamSets: Data Pipeline Platform Integrated into IBM’s AI Ecosystem

StreamSets’ trajectory is notable in the convergence of big data stream processing and AI. As a leading data pipeline platform, StreamSets built strong foundations in visual orchestration and cloud-native architecture, and through IBM’s acquisition, became a key part of the enterprise AI ecosystem. Here’s an overview of StreamSets’ positioning, features, and its new role post-acquisition.

Product Positioning and Features

StreamSets began as a cloud-native DataOps and data ingestion platform. Its Transformer for Snowflake service offers no-code drag-and-drop UI, predefined processors, and dynamic pipelines, enabling complex transformations directly in Snowflake.

Key features:

- Reduced onboarding time: Familiar visual UI shortens user ramp-up.

- Pushdown transformation: Complex transformations are executed inside Snowflake itself, reducing data movement and cost.

- Self-service collaboration: Drag-and-drop canvas, built-in functions, and slowly changing dimension (SCD) support for cross-team collaboration and reuse.

- Dynamic adaptation: Pipelines adjust proactively to data schema or infrastructure changes.

These features give StreamSets a similar visual and low-code experience to NiFi and Dataiku.
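
Running transformations inside the warehouse is easier to picture with a small example. The sketch below is not StreamSets code and does not talk to Snowflake: an in-memory SQLite database stands in for the warehouse, and the `orders` table, the `pipeline` dictionary, and `compile_to_sql` are invented for illustration. It shows the general technique of compiling pipeline steps into one SQL statement so that only the result leaves the database.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; table and columns are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("EU", 120.0, "paid"),
        ("EU", 80.0, "cancelled"),
        ("US", 200.0, "paid"),
        ("US", 50.0, "paid"),
    ],
)

# A visual pipeline reduced to data: each entry is what a canvas node might hold.
pipeline = {
    "source": "orders",
    "filter": "status = 'paid'",
    "group_by": "region",
    "aggregate": "SUM(amount) AS revenue",
}


def compile_to_sql(p: dict) -> str:
    # Turn the whole pipeline into one statement for the database to execute.
    # The pipeline is treated as trusted configuration here; a real compiler
    # would validate identifiers instead of interpolating them directly.
    return (
        f"SELECT {p['group_by']}, {p['aggregate']} "
        f"FROM {p['source']} WHERE {p['filter']} "
        f"GROUP BY {p['group_by']} ORDER BY {p['group_by']}"
    )


sql = compile_to_sql(pipeline)
rows = conn.execute(sql).fetchall()
print(sql)
print(rows)
```

Four rows sit in the table, but only the two aggregated rows cross the connection. At warehouse scale that difference is where the savings in data movement and cost come from.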

IBM Acquisition: Data Platform Meets AI

In December 2023, IBM announced an agreement to acquire StreamSets and webMethods from Software AG for €2.13 billion; the deal closed in 2024. IBM’s stated aims were to:

- Enhance watsonx data ingestion: StreamSets provides real-time ingestion/integration for IBM watsonx’s generative AI/data platform.

- Strengthen API management/integration: webMethods offers robust API/B2B integration for hybrid cloud environments.

- Unified platform and hybrid cloud: Combining StreamSets/webMethods delivers a modern, comprehensive data/app integration platform, accelerating AI and hybrid cloud transformation.

- Strategic AI investment: IBM’s CEO highlighted this as a core move for AI solutions and hybrid cloud; IBM also invested in VC funds and partnered with Hugging Face to accelerate generative AI.

Post-acquisition, StreamSets’ data pipeline capabilities are embedded in IBM watsonx, supporting generative AI with foundational data streams. Alongside Dataiku’s partnerships with NVIDIA and HPE, this move shows that data platforms are converging in the LLM era.

n8n: Open Source AI Agent Platform

Originating as an open-source automation framework, n8n has recently expanded its AI agent capabilities:

- Multiple AI integrations: Built-in nodes for OpenAI, Gemini, etc.; supports LangChain, LlamaIndex; connects to vector DBs and text-to-speech models.

- Low-code drag-and-drop: Users build agents by dragging LLM Node, Tool Call, Memory, Trigger, etc.; advanced users can write JavaScript in nodes for custom tools/logic.

- Rich templates and scenarios: Official templates for vector search, YouTube trend analysis, SQL data analysis assistant, web scraping agent, meeting slot suggestions, etc.; blog lists 15 business scenarios including visual scraping, SQL visualization, customer support, meeting summaries.

- Human-in-the-loop and automation: Supports both “human-activated” and “event-driven” agents; memory nodes enable context retention and long-term task execution.

These features lower the barrier for building intelligent workflows, bringing agentic automation to a wider developer audience.

Deep Insights: Connections, Differences, and Future Trends

- Architectural and interaction inheritance: NiFi/StreamSets and n8n all use node + connection visual orchestration, lowering entry barriers and enabling cross-team collaboration.

- Core driver transformation: Big data tools rely on hardcoded rules, SQL, scripts, or ETL logic; AI agent platforms use LLMs for decision-making, generating steps from prompts, parsing unstructured data, and dynamic tool invocation. Dataiku’s AI Prepare and AI Code Assistant exemplify this shift—users describe tasks in natural language to generate cleaning steps or code.

- Data flow vs. task flow: Traditional platforms output cleaned data or metrics; AI agent platforms produce “decisions” or “execution results.” For example, n8n’s SQL analysis agent returns reports directly; Dataiku Answers auto-generates SQL and queries databases.

- Governance and observability: As agent capabilities grow, enterprises face cost, compliance, and security risks. Dataiku offers centralized monitoring via LLM Mesh, Cost Guard, Quality Guard; n8n supports logging and conditional branches; StreamSets provides unified views and dynamic adaptation in Snowflake pipelines. Governance and monitoring are key to agent platform adoption.

- Ecosystem convergence: Dataiku’s partnerships with NVIDIA/HPE, IBM’s acquisition of StreamSets, and n8n’s integration with LangChain/vector DBs show AI and data platforms are merging. In the future, data pipelines, model governance, and agent orchestration may unify on a single platform, enabling closed-loop workflows from data ingestion to AI inference to application delivery.

Conclusion

The rise of AI agents brings us back to familiar “workflow orchestration” interfaces, but the driving force has shifted from rules to models. NiFi and StreamSets provided valuable engineering experience; Dataiku demonstrates how traditional platforms can evolve into AI-native platforms via LLM Mesh and agent tools; n8n shows how open source can democratize agentic automation.

As enterprises focus on security, cost, and compliance, governance and observability will become core differentiators for AI agent platforms. The “big data to AI” transformation path exemplified by Dataiku is likely to become an industry trend, with more traditional data vendors embracing agentic paradigms and achieving true convergence of data flow and intelligent decision-making.

References

- Generative AI With Dataiku: What’s New and What’s Next

- Generative AI in Dataiku: How It Started vs. How It’s Going

- AI Agents: Turning Business Teams From AI Consumers to AI Creators

- Release Notes — Dataiku DSS 14 (LLM Mesh, AI Code Assistant, AI Prepare)

- Dataiku and NVIDIA Announce FSI Blueprint at VivaTech 2025

- Dataiku Joins HPE Unleash AI Partner Program

- Your Practical Guide to LLM Agents in 2025 (+ 5 Templates for Automation)

- AI Agents Explained: From Theory to Practical Deployment

- 15 Practical AI Agent Examples to Scale Your Business in 2025

- Enterprise AI Agent Development Tools

- IBM Completes Acquisition of StreamSets and webMethods

- StreamSets’ Latest Release Improves User Productivity and Simplifies Complex Data Transformations in Snowflake

- AVOA: IBM’s Strategic Acquisition of StreamSets and webMethods