The greatest risks to modern internet infrastructure often lie not in the code itself, but in undocumented implicit assumptions and unvalidated automated configuration pipelines. Cloudflare’s outage is a wake-up call for every Infra/AI engineer.

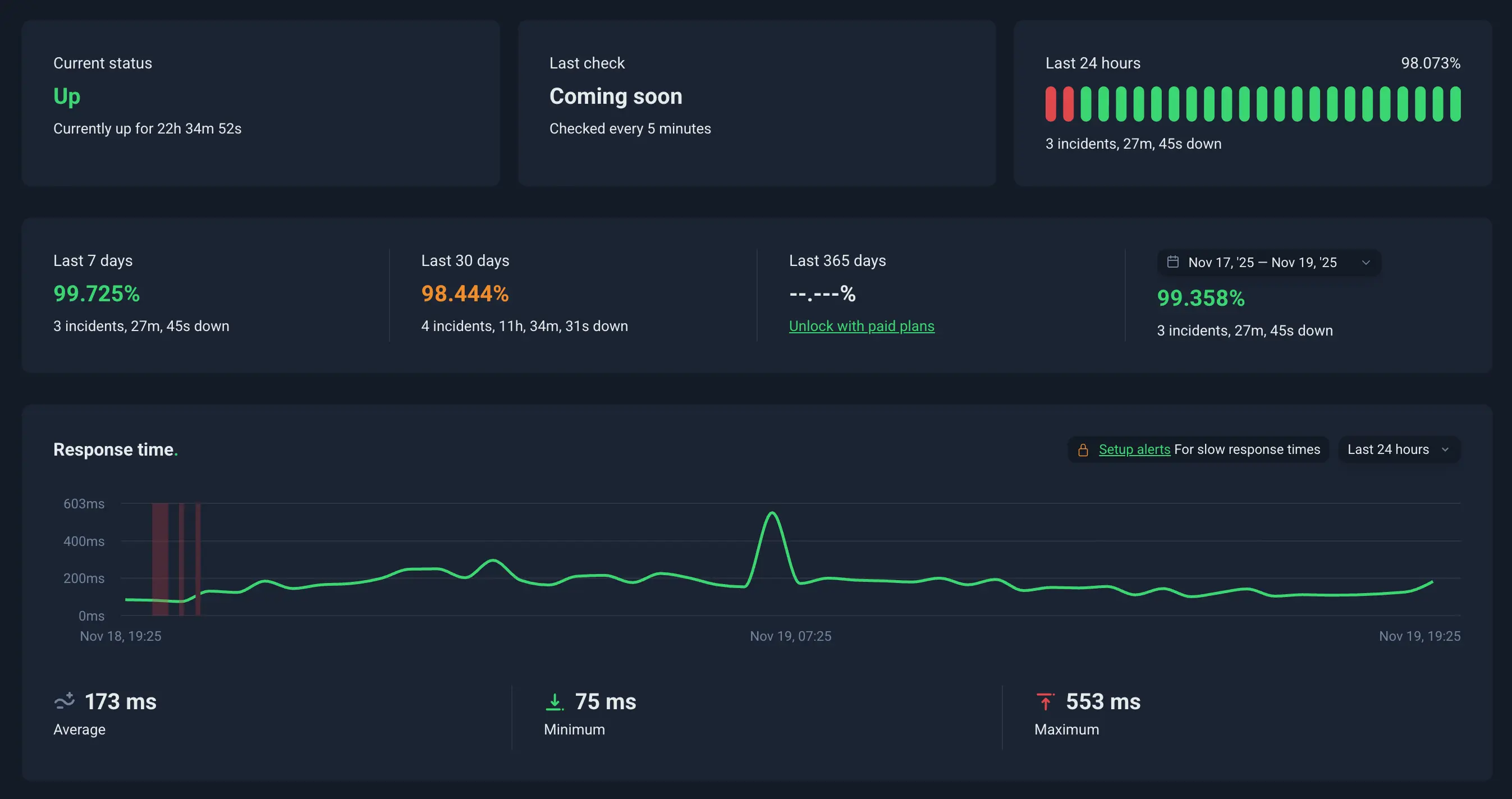

Yesterday (November 18), Cloudflare experienced its largest global outage since 2019. As this site is hosted on Cloudflare, it was also affected—one of the rare times in eight years that the site was inaccessible due to an outage (the last time was a GitHub Pages failure, which happened the year Microsoft acquired GitHub).

This incident was not caused by an attack or a traditional software bug, but by a seemingly “safe” permissions update that hit the weakest links in modern infrastructure: implicit assumptions and automated configuration pipelines. Cloudflare has published a blog post, “Cloudflare outage on November 18, 2025,” explaining the cause.

The outage unfolded as the following chain reaction:

- A permissions adjustment led to metadata changes;

- The metadata change doubled the number of entries in the feature file;

- The doubled file exceeded the proxy module’s preallocated memory limit;

- The memory limit caused the core proxy to panic;

- The proxy panic led to a cascade failure in downstream systems.

This kind of chain reaction is the most typical—and dangerous—systemic failure mode at today’s internet scale.

Root Cause: Implicit Assumptions Are Not Contracts

Let’s first look at the core hidden risk in this incident. The Bot Management feature file is automatically generated every five minutes, relying on a default premise:

The system.columns query result contains only the default database.

This assumption was not documented or validated in configuration—it existed only in the engineer’s mental model.

After a ClickHouse permissions update, the underlying r0 tables became visible to the query, instantly doubling its results. The generated file then held more than the 200 features for which FL2 preallocates memory, and the proxy panicked.

Once an implicit assumption is broken, the system lacks a buffer and is highly prone to cascading failures.
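One way to turn that assumption into a contract is to state the database filter in code and reject duplicate column names. The sketch below is illustrative only: `ColumnRow`, `select_feature_columns`, and the table name `features` are hypothetical, and the rows merely stand in for a system.columns result set.

```python
from collections import Counter
from typing import List, NamedTuple, Tuple


class ColumnRow(NamedTuple):
    """Hypothetical stand-in for one row of a system.columns result."""
    database: str
    table: str
    name: str
    col_type: str


EXPECTED_DATABASE = "default"  # the assumption, written down instead of implied


def select_feature_columns(rows: List[ColumnRow], table: str) -> List[Tuple[str, str]]:
    """Return (name, type) pairs for one table, from the expected database only."""
    # Filter on the database explicitly instead of assuming only one is visible.
    selected = [
        (row.name, row.col_type)
        for row in rows
        if row.database == EXPECTED_DATABASE and row.table == table
    ]
    # Enforce the contract: each column name may appear exactly once.
    counts = Counter(name for name, _ in selected)
    duplicates = sorted(name for name, count in counts.items() if count > 1)
    if duplicates:
        raise ValueError(f"duplicate feature columns: {duplicates}")
    return selected
```

With this shape, a second database becoming visible changes nothing in the output, and a genuinely duplicated column fails loudly at generation time rather than at the edge.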

Configuration Pipelines Are Riskier Than Code Pipelines

This incident was not caused by code changes, but by data-plane changes:

- SQL query behavior changed;

- Feature files were automatically generated;

- The files were broadcast across the network.

This is typical of modern infrastructure: changes to data, schema, and metadata are far more likely to destabilize systems than changes to code.

Cloudflare’s feature file is a “supply chain input,” not a regular configuration. Anything entering the automated broadcast path is equivalent to a system-level command.
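If the generated file is treated as a supply chain input, it makes sense to gate it before it reaches the broadcast path. The following is a minimal sketch of such a gate, not Cloudflare's actual pipeline: `check_before_publish`, `GateResult`, and the growth threshold are assumptions made for illustration, while the cap of 200 comes from the limit described above.

```python
from dataclasses import dataclass
from typing import List

MAX_FEATURES = 200      # hard cap, matching the limit described in this article
MAX_GROWTH_RATIO = 1.5  # hypothetical threshold for a suspicious jump in size


@dataclass(frozen=True)
class GateResult:
    ok: bool
    reason: str


def check_before_publish(candidate: List[str], last_published: List[str]) -> GateResult:
    """Decide whether a freshly generated feature list may be broadcast."""
    if not candidate:
        return GateResult(False, "candidate is empty")
    if len(set(candidate)) != len(candidate):
        return GateResult(False, "candidate contains duplicate features")
    if len(candidate) > MAX_FEATURES:
        return GateResult(False, f"{len(candidate)} features exceeds cap of {MAX_FEATURES}")
    # Compare against the last published version to catch sudden growth.
    if last_published and len(candidate) > MAX_GROWTH_RATIO * len(last_published):
        return GateResult(False, "feature count grew too fast versus last published file")
    return GateResult(True, "ok")
```

A file that doubles between two five-minute runs would be stopped by either the duplicate check or the growth check, well before any consumer has to cope with it.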

Language Safety Can’t Eliminate Boundary Layer Complexity

A former Cloudflare engineer summarized it well:

Rust can prevent a class of errors, but the complexity of boundary layers, data contracts, and configuration pipelines does not disappear.

The FL2 panic stemmed from a single unwrap(). This isn’t a language issue, but a lack of system contracts:

- No upper-bound validation for feature count;

- File schema lacked version constraints;

- Feature generation logic depended on implicit behavior;

- Core proxy error mode was panic, not graceful degradation.

Most incidents in modern distributed systems come from “bad input,” not “bad memory.”
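The missing contracts listed above can be expressed as a parser that reports violations as a typed error instead of crashing. This is a sketch under stated assumptions: the JSON layout, the `schema_version` field, and the names `FeatureFile` and `FeatureFileError` are hypothetical and do not describe Cloudflare's real file format.

```python
import json
from dataclasses import dataclass
from typing import Tuple

MAX_FEATURES = 200            # upper bound described in this article
SUPPORTED_SCHEMA_VERSION = 1  # hypothetical version field


class FeatureFileError(Exception):
    """Raised for any input that violates the feature-file contract."""


@dataclass(frozen=True)
class FeatureFile:
    schema_version: int
    features: Tuple[str, ...]


def parse_feature_file(raw: str) -> FeatureFile:
    """Parse and validate a feature file; never return a half-valid result."""
    try:
        doc = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise FeatureFileError(f"not valid JSON: {exc}") from exc
    if not isinstance(doc, dict):
        raise FeatureFileError("top level must be an object")
    # Version constraint: refuse files written for a schema we do not know.
    if doc.get("schema_version") != SUPPORTED_SCHEMA_VERSION:
        raise FeatureFileError(f"unsupported schema_version: {doc.get('schema_version')!r}")
    features = doc.get("features")
    if not isinstance(features, list) or not all(isinstance(f, str) for f in features):
        raise FeatureFileError("features must be a list of strings")
    # Upper-bound validation: the limit is checked, not assumed.
    if len(features) > MAX_FEATURES:
        raise FeatureFileError(f"{len(features)} features exceeds limit of {MAX_FEATURES}")
    return FeatureFile(SUPPORTED_SCHEMA_VERSION, tuple(features))
```

The caller then has exactly one error type to handle, which makes the choice between rejecting the update and degrading gracefully an explicit decision.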

Core Proxies Need Controllable Failure Paths

FL/FL2 are Cloudflare’s core proxies; all requests must pass through them. Such components should not fail with a panic. Instead, they should be able to:

- Ignore abnormal features;

- Truncate over-limit fields;

- Roll back to previous versions;

- Fail open or fail closed;

- Skip the Bot module and continue processing traffic.

As long as the proxy “stays alive,” the entire network won’t be completely paralyzed.
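A rough sketch of what “staying alive” can look like: a bad update is rejected while the last known good feature set stays active, and a request is still served when no bot score is available. Everything here is hypothetical (`BotModule`, `strict_parse`, `handle_request`, and the toy scoring rule), and failing open is only one possible policy; a security-sensitive deployment might prefer to fail closed.

```python
from typing import Callable, List, Optional

MAX_FEATURES = 200  # limit described in this article


def strict_parse(raw: str) -> List[str]:
    """Hypothetical parser: comma-separated feature names, bounded in count."""
    names = [n for n in raw.split(",") if n]
    if not names or len(names) > MAX_FEATURES:
        raise ValueError(f"invalid feature count: {len(names)}")
    return names


class BotModule:
    def __init__(self, parse: Callable[[str], List[str]]) -> None:
        self._parse = parse
        self.active_features: Optional[List[str]] = None  # last known good set
        self.rejected_updates = 0

    def apply_update(self, raw: str) -> bool:
        """Swap in a new feature set only if it parses; otherwise keep the old one."""
        try:
            features = self._parse(raw)
        except Exception:
            self.rejected_updates += 1  # surface this through metrics and alerts
            return False
        self.active_features = features
        return True

    def score(self, request: dict) -> Optional[int]:
        """Toy score: how many active features appear in the request's signals."""
        if self.active_features is None:
            return None
        signals = set(request.get("signals", []))
        return sum(1 for f in self.active_features if f in signals)


def handle_request(bot: BotModule, request: dict) -> dict:
    """Serve the request even if bot scoring is unavailable (fail open)."""
    try:
        score = bot.score(request)
    except Exception:
        score = None  # skip the module and keep processing traffic
    return {"status": 200, "bot_score": score}
```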

Data Changes Are More Uncontrollable Than Code Changes

The essence of this incident:

- Subtle permission changes;

- ClickHouse default behavior changed;

- Query results propagated to distributed systems;

- Automated publishing amplified the error;

- Edge proxies crashed due to uncontrolled input.

Future AI infrastructure will be even more complex: models, tokenizers, adapters, RAG indexes, and KV snapshots all require frequent updates.

In future AI infrastructure, data-plane risks will far exceed those of the code-plane.

Recovery Process Shows Engineering Maturity

During the incident, Cloudflare took several measures:

- Stopped generating erroneous feature files;

- Force-distributed the previous version of the file;

- Rolled back Bot module configuration;

- Ran Workers KV and Access outside the core proxy;

- Restored traffic in stages.

Restoring hundreds of PoPs worldwide simultaneously demonstrates a high level of engineering maturity.

Lessons for Infra/AI/Cloud Native Engineers

The Cloudflare event highlights four common risks in large-scale systems:

- Implicit assumptions fail;

- Configuration supply chain contamination;

- Automated publishing amplifies errors;

- Core proxies lack graceful degradation paths.

For AI Infra practitioners, these risks are even more relevant:

- Model weight updates may ship without schema validation;

- Adapter merges may be contaminated;

- Incremental RAG index builds may be unstable;

- Inference graph configuration may be broken by bad data;

- Automatically rolled-out models may propagate errors network-wide.

AI engineering is replaying Cloudflare’s infrastructure dilemmas—just at greater speed and scale.
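The same contract thinking carries over to model artifacts. As a small illustration, the check below compares a weights blob against a manifest before rollout. The manifest keys `weights_sha256` and `embedding_rows` and the function `validate_model_bundle` are invented for this sketch and do not correspond to any particular serving stack.

```python
import hashlib
from typing import List


def validate_model_bundle(manifest: dict, weights: bytes, tokenizer_vocab_size: int) -> List[str]:
    """Return a list of contract violations; an empty list means safe to roll out."""
    problems: List[str] = []

    # Integrity: the bytes we are about to ship must match the manifest.
    expected = manifest.get("weights_sha256")
    actual = hashlib.sha256(weights).hexdigest()
    if expected != actual:
        problems.append("weights checksum does not match manifest")

    # Compatibility: every tokenizer id needs a row in the embedding table.
    rows = manifest.get("embedding_rows")
    if not isinstance(rows, int) or rows < tokenizer_vocab_size:
        problems.append("embedding table has fewer rows than the tokenizer vocabulary")

    return problems
```

Returning every violation at once, rather than stopping at the first, gives whoever reviews a blocked rollout the full picture in one pass.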

Summary of the Former Cloudflare Engineer’s Views

His insights pinpoint the hardest problems in distributed systems:

- The issue isn’t code, but missing contracts;

- Not the language, but undefined input boundaries;

- Not modules, but lack of validation in the configuration supply chain;

- Not bugs, but absence of fail-safe mechanisms.

This incident shows that the real fragility in modern infrastructure lies in “behavioral boundaries,” not “memory boundaries.”

Summary

The Cloudflare outage of November 18 was not a coincidence, but a predictable result of modern internet infrastructure growing to large scale and a high degree of automation.

Key takeaways from this event:

- System assumptions must be made explicit;

- Configuration pipelines must be validated;

- Automated publishing needs “kill-switch” mechanisms;

- Core proxies must be designed with controllable failure paths;

- Data-plane contracts must be stricter than code-plane contracts.
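The takeaway about automated publishing can be pictured as a staged rollout that stops itself. The sketch below assumes hypothetical `deploy`, `healthy`, and `kill_switch` callbacks supplied by the caller; it shows the control flow only, not a production rollout system.

```python
from typing import Callable, List, Sequence, Tuple


def staged_rollout(
    stages: Sequence[Sequence[str]],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
    kill_switch: Callable[[], bool],
) -> Tuple[bool, List[str]]:
    """Deploy stage by stage; stop when the kill switch is set or a stage is unhealthy.

    Returns (completed, nodes_deployed_so_far).
    """
    deployed: List[str] = []
    for stage in stages:
        # A human or an automated monitor can halt the rollout between stages.
        if kill_switch():
            return False, deployed
        for node in stage:
            deploy(node)
            deployed.append(node)
        # Do not widen the blast radius until this stage has proven healthy.
        if not all(healthy(node) for node in stage):
            return False, deployed
    return True, deployed
```

The list of deployed nodes is returned even on failure so that a rollback knows exactly which nodes received the new version.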

In the AI-native Infra era, these requirements will only become more stringent.