Recently, while using Claude Code, I kept seeing recommendations to use OpenRouter as a proxy for model calls. It not only helps work around IP blocks but also supports BYOK (Bring Your Own Key). At first I saw it as a temporary tool, but after a closer look I found it is much more than a “relay proxy.”

There’s a lot worth studying behind this platform. I had already come across OpenRouter during my recent research on AI Gateways (such as Portkey, Kong AI Gateway, Envoy AI Gateway, and LiteLLM), so I took the opportunity presented by Claude Code to look at it more closely and summarize my findings.

Below is a mind map analyzing OpenRouter’s features, ecosystem, and comparisons as an AI model aggregation gateway.

[Mind map: OpenRouter]

What is OpenRouter?

OpenRouter provides a unified API that lets developers call models from multiple providers through a single endpoint, including OpenAI, Anthropic, Mistral, and Google Gemini, as well as Chinese model families such as Kimi and Qwen.

Its features include:

- A unified `/v1/chat/completions` interface, compatible with the OpenAI API

- Model routing, rate limiting, and fallback strategies

- Support for your own API Key (BYOK) or using the platform’s key

- Some open-source SDKs and an active Discord community
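As a rough illustration of what the unified interface means in practice, the sketch below builds an OpenAI-style chat request for OpenRouter using only the Python standard library. The helper names and the model slug in the usage note are my own assumptions for illustration; check OpenRouter's model list for current identifiers.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completion request."""
    payload = {
        "model": model,  # provider-prefixed slug; which slugs exist is an assumption
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text (OpenAI response shape)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key, something like `send(build_chat_request("anthropic/claude-sonnet-4", "Say hello.", key))` would perform the call. Switching providers should then only require changing the model string, which is the main appeal of the aggregated endpoint.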

Highlights I Observed

While researching OpenRouter, I found the following highlights:

- Very fast model integration—new popular models appear on OpenRouter soon after release (e.g., Kimi K2, Claude 3.5, Command R+, etc.)

- Developer-oriented community—many use it to quickly integrate new models or bypass certain access restrictions

- Provides a visual configuration interface—easy to set up routing and calls

- Transparent pricing—token-based billing, 5% platform fee for BYOK

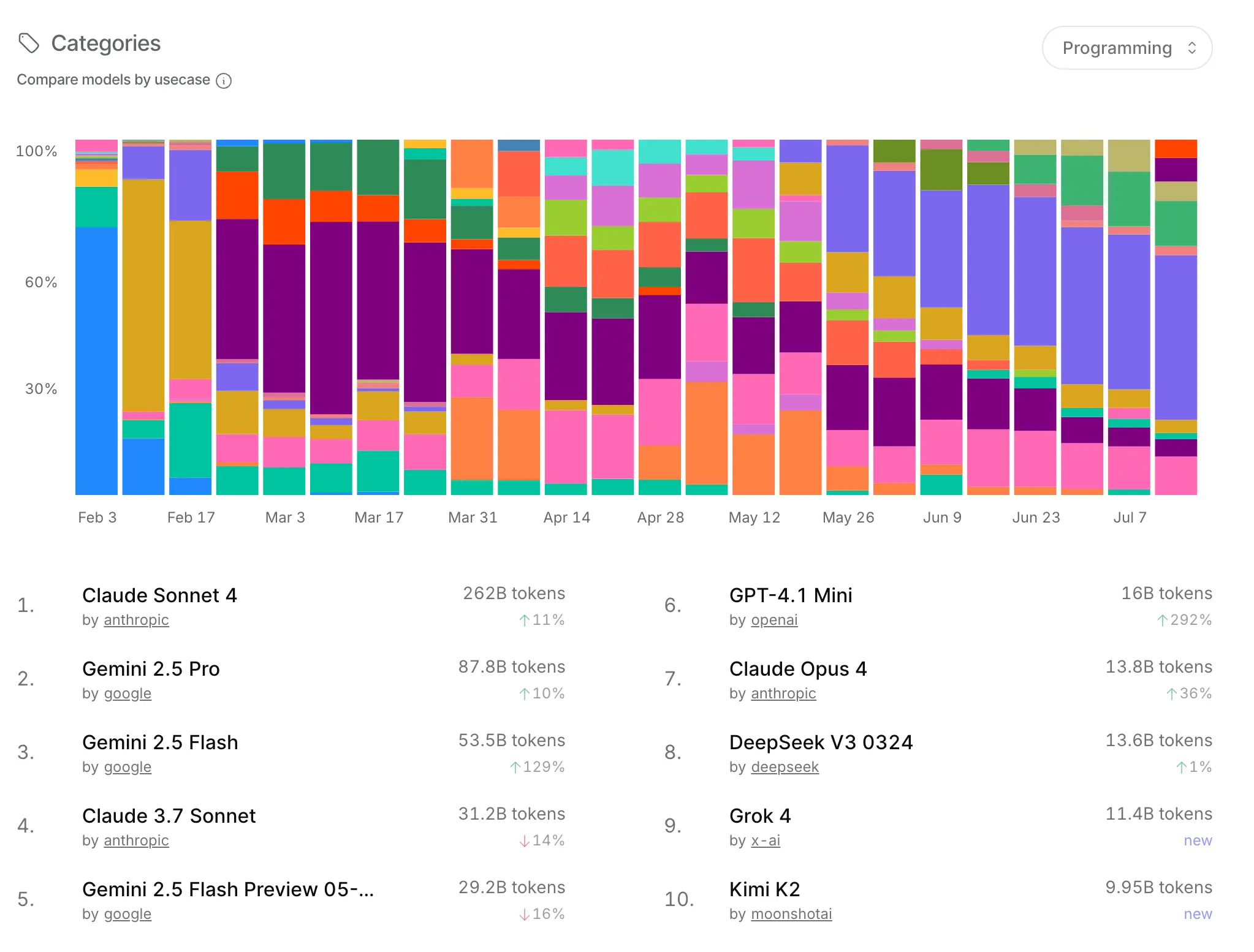

Additionally, the model rankings published by OpenRouter match my own experience. For example, on July 15, 2025, Claude Sonnet 4 ranked first among models used for programming, and it is also my go-to model for its balance of cost and performance.

How is it Different from Other AI Gateways?

During my AI Gateway research, I also looked into several mainstream solutions. Here’s a brief comparison:

| Product | Features | Suitable Scenarios |
|---|---|---|
| OpenRouter | Plug-and-play, API aggregation, active community | Lightweight integration, multi-model trial, bypassing restrictions |
| Portkey | Focus on routing and rate limiting, commercial SLA | Enterprise deployment, quota control, flexible policies |
| LiteLLM | Open-source Python middleware, unified interface | Quick integration of self-hosted or BYOK models |
| Kong AI Gateway | Integrated with Kong, strong plugin system | AI extension for existing API Gateway users |
| Envoy AI Gateway | AI traffic control and observability based on Envoy | Service mesh + unified AI management |

OpenRouter is more like a “developer-friendly AI model aggregator.” It doesn’t emphasize enterprise-level security and SLA, but rather openness, flexibility, and rapid onboarding.

Business Model Analysis

OpenRouter does not charge a subscription fee, but:

- Provides official API keys: charged by token

- Supports BYOK: users use their own model keys, and the platform only charges a 5% service fee

- Has a simple credit system for quota and priority management

- No private deployment option yet
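To make the BYOK fee concrete, here is a back-of-the-envelope calculation. The per-token prices and token counts are made-up placeholders, and I'm assuming the 5% fee is computed on what the request costs at the provider's price; the exact basis may differ.

```python
from decimal import Decimal

BYOK_FEE_RATE = Decimal("0.05")  # the 5% service fee mentioned above


def upstream_cost(prompt_tokens, completion_tokens, input_price_per_m, output_price_per_m):
    """Cost of one request at the provider's per-million-token prices."""
    million = Decimal(1_000_000)
    total = (Decimal(prompt_tokens) * input_price_per_m
             + Decimal(completion_tokens) * output_price_per_m)
    return total / million


def byok_fee(cost):
    """Platform fee on a BYOK request (assumed basis: the upstream cost)."""
    return cost * BYOK_FEE_RATE


# Placeholder prices: 3 USD per million input tokens, 15 USD per million output tokens
cost = upstream_cost(200_000, 50_000, Decimal("3"), Decimal("15"))
fee = byok_fee(cost)
print(f"provider bill: ${cost}, platform fee: ${fee}")
# prints "provider bill: $1.35, platform fee: $0.0675"
```

Under these assumptions the fee is small per request, which fits the picture of a platform that earns on volume rather than subscriptions.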

Its core value lies in solving the ‘unified entry’ problem for model calls and building services (rate limiting, logging, routing, fallback, etc.) around this entry, much like early API Gateway products.
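The fallback service mentioned above can be sketched on the client side. This is not how OpenRouter implements it internally; it is a minimal illustration in which `call_model` is a hypothetical callable you supply (for example, a wrapper around an HTTP request) and the model names are placeholders.

```python
from typing import Callable, List, Sequence, Tuple


class AllModelsFailed(Exception):
    """Raised when every candidate model raised an error."""


def complete_with_fallback(
    models: Sequence[str],
    prompt: str,
    call_model: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order; return (model_used, reply) from the first success."""
    errors: List[str] = []
    for model in models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # a real gateway would retry only on retryable errors
            errors.append(f"{model}: {exc}")
    raise AllModelsFailed("; ".join(errors))
```

A gateway that sits at the unified entry point can apply the same loop server-side, so the application sends one request and does not need to know which provider finally answered.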

My Thoughts

- OpenRouter is more like a “Cloudflare between models,” aiming to establish a unified hub between LLM providers and application developers

- Although currently lightweight in features, its focus on the essential entry point for model calls means it could evolve into a key part of AI infrastructure

- For this reason, many vendors want to build their own “OpenRouter,” treating it as a strategic tool for owning the AI entry point and the ecosystem data that flows through it

Final Words

I’m still exploring OpenRouter’s capabilities and evaluating whether it fits into my own development environment. If you’re also working on multi-model integration, AI Gateway, or Agent Infra, it’s worth keeping an eye on this project.

If I get a chance to try enterprise features of other AI Gateways (like Portkey Pro or self-hosted solutions), I’ll write a comparative review in the future.